Companies keep looking for faster ways to build and deploy new software, especially in the software-as-a-service (SaaS) space. They want fresh features that satisfy evolving customer demands while cutting time-to-market.

Generative AI has emerged as a driving force in this process, redefining how teams create and refine SaaS products. Models like GPT interpret large data sets, produce code snippets, and support tasks that once took weeks of manual work.

Below, we look at the impact of generative AI on SaaS development. We examine how it tackles coding chores, prototype design, user support, and data insights.

Why Generative AI Suits SaaS

SaaS companies rely on continuous updates and user feedback loops to stay relevant. Traditional coding methods slow progress if teams wait on specialist intervention for every improvement.

Generative AI tools act as on-demand helpers that generate code fragments or design mockups. This shortens iteration so that product owners can test concepts or fix issues without lengthy dev cycles.

Cloud-based platforms make large data sets readily accessible. Generative AI thrives on extensive corpora, such as documentation, code repositories, and user logs. With the SaaS model, user events or error logs flow in real time, keeping the training data fresh. This synergy allows machine-generated outputs to reflect current patterns, letting the platform adapt.

Engineers also appreciate that generative models remove some repetitive coding. They rely on these automated suggestions for routine patterns, saving human energy for advanced logic. Skilled engineers remain vital for problem-solving and architecture but can leverage AI to boost productivity.

Core Areas of Impact

Automated Code Generation

Developers often face the same recurring tasks, such as setting up CRUD endpoints, handling authentication, or writing repetitive test scripts. Generative AI can propose stable code structures. Team members supply prompts describing their needs, and the system responds with partial or fully formed code. People then review and refine. This approach slashes the initial creation time for many modules.

Once integrated into an IDE or command-line tool, AI-based code generation also fosters standardization. Teams can align on best practices for naming conventions or error handling because the model suggests consistent patterns. This unity reduces confusion when multiple developers modify the same codebase. Over time, the approach helps shape a more uniform style.

In high-stakes scenarios, staff confirm that generated snippets align with security policies and performance goals. A generative model might skip certain checks or create code that works but lacks depth. Engineers remain gatekeepers, balancing the time saved with the oversight needed. For routine tasks, machine output can prove reliable.

Prototyping User Interfaces

SaaS products need polished interfaces that resonate with end users. That design phase historically involved multiple sketches or wireframes, plus endless revisions.

Now, generative models can propose interface layouts or color schemes based on brand guidelines. A product manager describes the desired flow. The AI then returns mockups that reflect established design patterns.

These prototypes help teams visualize features faster. Even if final designs require manual tweaks, the time from idea to clickable demo shrinks. Some advanced systems ingest style guides, brand colors, or user persona details to tailor recommendations further. Designers retain creative control, but they skip the most time-consuming early steps.

Users also benefit from consistent styling across different modules of a SaaS platform. A generative system references prior patterns, suggesting similar navigation or button placements. That consistency fosters a cohesive experience, which aids user onboarding. It spares designers from reinventing layouts every time a new feature arrives.

Data Analytics and Insights

Generative AI also supports data analysis by interpreting logs or metrics to craft concise summaries. Instead of scanning hundreds of lines of telemetry, a product manager might query the AI by asking, “Why are users dropping after the second step of onboarding?” The system inspects usage data and then forms an explanation or suggests a possible fix. This approach speeds discovery by translating raw figures into plain insights.
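
Whatever explanation the AI offers for an onboarding drop, the underlying numbers are easy to check by hand. The following is a minimal Python sketch of that check, assuming you can export a count of users who reached each step. The step names and counts are hypothetical, and `funnel_dropoff` and `worst_step` are illustrative helpers, not part of any product.

```python
from typing import Dict, List, Tuple


def funnel_dropoff(step_counts: List[Tuple[str, int]]) -> List[Dict[str, object]]:
    """Return the share of users lost between each pair of consecutive steps."""
    report = []
    for (prev_name, prev_count), (name, count) in zip(step_counts, step_counts[1:]):
        lost = prev_count - count
        # Guard against an empty step so the division cannot fail.
        rate = lost / prev_count if prev_count else 0.0
        report.append(
            {"from": prev_name, "to": name, "lost": lost, "dropoff_rate": round(rate, 3)}
        )
    return report


def worst_step(step_counts: List[Tuple[str, int]]) -> Dict[str, object]:
    """Return the transition with the highest drop-off rate."""
    return max(funnel_dropoff(step_counts), key=lambda row: row["dropoff_rate"])


# Hypothetical onboarding counts: users who reached each step.
onboarding = [
    ("signup", 1000),
    ("profile", 800),
    ("connect_data", 320),
    ("first_report", 280),
]

worst = worst_step(onboarding)
print(worst["from"], "->", worst["to"], worst["dropoff_rate"])  # profile -> connect_data 0.6
```

In this made-up data, most users leave after the second step, which is the kind of finding a product manager would want to confirm before acting on an AI-generated summary.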

Companies that track A/B test outcomes may rely on generative AI to clarify which variant performed best. The model can parse event data, check funnel steps, and propose final decisions. Product owners save time by not crunching spreadsheets alone, though they validate suggestions before implementing large changes.
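
One way to validate an AI-suggested A/B winner is a standard two-proportion z-test on the raw conversion counts. The sketch below uses only the Python standard library. The function name and the sample numbers are assumptions for illustration, and the article itself does not prescribe any particular statistical test.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 100 of 1000 users converted on A, 200 of 1000 on B.
z, p = two_proportion_z_test(100, 1000, 200, 1000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

A small p-value suggests the difference is unlikely to be noise. A result near the usual 0.05 threshold is a signal to collect more data before adopting the model's recommendation.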

When paired with machine learning SaaS, generative AI can interpret event data faster, suggesting data-driven improvements for your product. It sees patterns in usage logs or error frequency, concluding that users want more help in certain tasks. This feedback loop transforms analytics from a manual chore into a guided process, letting leaders respond swiftly.

Customer Support and Chat

SaaS platforms often field repetitive questions like “How do I reset my password?” or “Why won’t my data import?” Chatbots powered by generative AI can handle these queries around the clock. They learn from existing documentation, support tickets, and knowledge bases to craft relevant answers. That reduces ticket loads, freeing human agents for trickier issues.

Adaptive chatbots even offer proactive tips. For example, if a user logs in from an unknown device, the system might ask if they need a quick tutorial or if they intend to import specific data. Over time, the chatbot refines its approach, spotting common user pain points. This fosters a better user experience, increasing satisfaction and reducing escalations.

Of course, businesses must ensure that these bots do not misrepresent important details. AI can generate plausible but incorrect statements if it lacks the right context. Periodic reviews help confirm that the system’s knowledge base remains current and accurate. Thorough curation of relevant documents or FAQs supports better outcomes.

Shifting Development Workflows

Blending Human and Machine Efforts

SaaS engineering teams may restructure roles to make space for generative AI. One approach sees a developer start by prompting the model for initial code or interface suggestions. Then, the developer refines outputs, verifying logic, security, and performance.

This loop merges machine speed with human oversight. Productivity rises because no one starts from scratch. That synergy needs careful orchestration. If staff leans too heavily on AI, mistakes could happen. Manual reviews remain essential for advanced logic, especially in sensitive areas like user data storage.

Over time, the model’s suggestions may improve as it learns from feedback. A healthy cycle emerges where developers shape the AI’s training set and adapt final results as needed.

Shorter Iteration Cycles

The SaaS model typically runs on sprints or rapid cycles. Generative AI fits perfectly by chopping down the coding or design time for each sprint. After defining user stories, teams let the model propose code stubs or interface wireframes. Within hours, prototypes appear, letting QA and product managers jump into testing or user feedback.

As the cycle continues, the AI adjusts to new inputs or bug reports. If a scenario fails in testing, the model can suggest an alternative. That keeps teams from rewriting major portions manually. Over time, new features ship in days rather than weeks. Marketers can schedule updates more precisely because dev tasks lose much of their unpredictability.

Benefits for SaaS Companies

Faster Time to Market

Generative AI removes bottlenecks that block progress. Teams prototype faster and push code forward with fewer staff hours. Leaders can pivot or test ideas without huge overhead, releasing alpha or beta versions quickly. That agility matters in a field where fresh competitors might appear or existing clients demand updates.

When a product evolves in near-real time, it encourages more user involvement. Beta testers see new features monthly or even weekly. Their feedback flows back into the generative system, guiding further improvements. This dynamic approach fosters ongoing engagement, making users feel heard and valued.

Consistent Branding

User interfaces produced by generative models lean on existing style references. Designers can load brand elements or color palettes, ensuring consistency across modules. Whether a developer is adding a new dashboard or a marketing page, the AI references the same brand voice. This unifies the product’s look and feel, elevating brand perception.

If multiple dev teams work on different modules, they might otherwise create inconsistent styles. Generative AI smooths these rough edges with standardized components. Over time, the product appears cohesive, even if the staff rotates or new devs join. That brand stability benefits user adoption and recognition.

Democratizing Development

By lowering coding barriers, generative AI invites non-technical roles to shape software. A product manager might detail a feature’s logic, letting the AI produce a functional prototype. A business analyst might tweak a data pipeline with minimal engineering help. This inclusive model fosters cross-functional synergy, removing the classic dev bottleneck.

In organizations with limited developer capacity, marketing or ops staff can step up to create minor features. They do not need advanced coding knowledge, just a workable understanding of prompts. This frees core engineers to tackle deeper architecture tasks. The entire enterprise runs more smoothly because fewer tasks queue up for specialist review.

Generative AI Challenges and Concerns

Accuracy and Validation

Generative AI can produce code or text that seems correct yet misses certain details. A snippet might compile but fail edge cases in real usage. Without adequate testing, these errors slip through to production. Skilled developers must review outputs, ensuring they pass functional tests and align with software development best practices.
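
As a small illustration of code that runs but fails an edge case, consider the Python sketch below. Both functions are hypothetical examples written for this article, not output from any specific model. The first version works on typical input and crashes on an empty list. The second handles that case explicitly.

```python
from typing import Optional, Sequence


def average_naive(values: Sequence[float]) -> float:
    # Plausible first draft: correct for typical input,
    # but raises ZeroDivisionError when values is empty.
    return sum(values) / len(values)


def average_safe(values: Sequence[float]) -> Optional[float]:
    # Reviewed version: returns None when there is nothing to average.
    if not values:
        return None
    return sum(values) / len(values)


# Edge-case checks a reviewer might add before merging.
assert average_safe([2, 4]) == 3
assert average_safe([]) is None
```

A review step that always asks "what happens with empty, missing, or extreme input?" catches many of these gaps before they reach production.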

Similarly, UI prototypes may look fine superficially but cause accessibility problems or break on certain screens. AI-based suggestions do not guarantee compliance with every standard. User feedback and QA remain essential to catch shortfalls. Over time, curated training data can reduce these flaws, but they never vanish entirely.

Data Privacy and Compliance

When generative AI processes user logs or support tickets, it gains access to private info. If strong security measures do not govern the model, it may store or leak sensitive data. SaaS providers must confirm that the pipeline respects relevant regulations, such as GDPR, or HIPAA if health data is involved.

Moreover, some generative solutions operate on external servers owned by third parties. If SaaS providers pass raw user data through those services, they might violate privacy commitments. Organizations should consider self-hosted or encrypted approaches for extremely sensitive contexts. Clear policies and thorough vendor assessments mitigate these risks.
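
One common precaution is to mask obvious identifiers before any text leaves your own infrastructure. Here is a minimal Python sketch that assumes email addresses and IPv4 addresses are the fields of concern. The patterns and the `redact` helper are illustrative only. Regex masking on its own does not make a pipeline GDPR or HIPAA compliant, and real deployments usually need broader detection.

```python
import re

# Simplified patterns for illustration; they will not catch every variant.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email and IPv4 addresses with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = IPV4_RE.sub("[IP]", text)
    return text


log_line = "User jane.doe@example.com logged in from 203.0.113.7"
print(redact(log_line))  # User [EMAIL] logged in from [IP]
```

Running redaction as a separate step before the model call makes it easy to audit what was actually sent to a third party.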

Bias and Ethical Implications

Generative models learn from existing data sets, which can contain biases. That might lead to UI proposals or code suggestions that marginalize certain user groups or push certain behaviors.

If the training data skews to certain demographics, the system’s outputs follow it. SaaS teams must track these issues and correct them, perhaps filtering the data or adjusting the model.

Ethical usage also means disclosing AI’s role. If a chatbot handles user queries, it should note that responses come from machine intelligence. Customers or regulators may expect disclaimers. Transparency fosters trust and allows users to weigh how much they rely on automated output for important decisions.

Generative AI Implementation Strategies

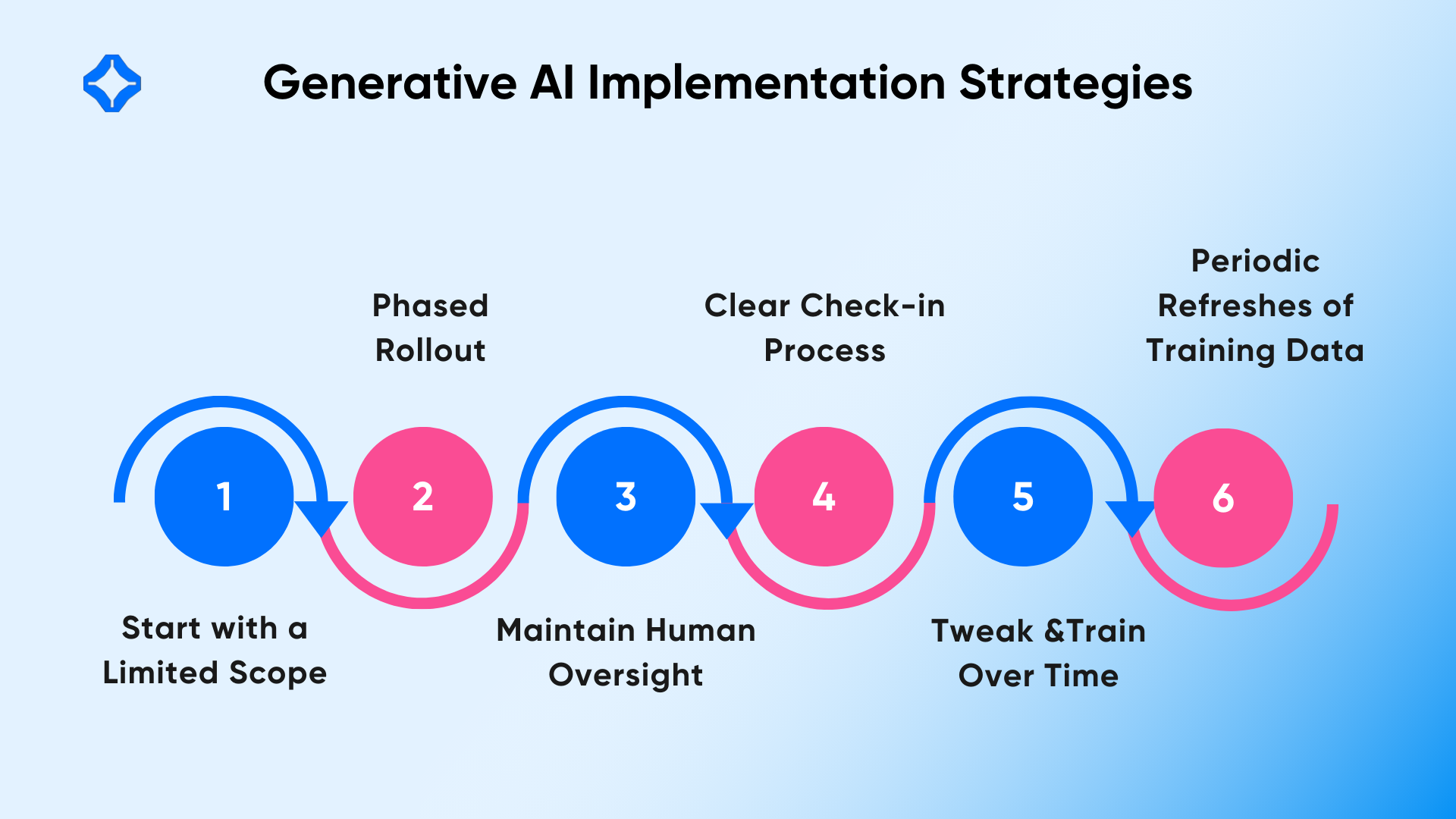

Start with a Limited Scope

SaaS leaders should pick one or two areas where generative AI can offer fast wins. Code generation for backend services or basic UI prototypes, for example, might be manageable. Teams gather metrics like hours saved or errors prevented. Early success fosters trust among developers, leading to broader adoption.

A phased rollout also prevents big disruptions. If an AI-based approach falters or introduces critical flaws, the damage stays contained. Organizations tweak the setup, refine training prompts, or clarify best practices. That incremental approach keeps staff from feeling overwhelmed by changes to their usual workflows.

Maintain Human Oversight

Even the best generative models can produce subtle bugs or misrepresent data. Skilled developers must remain in the loop, reviewing outputs and verifying correctness. A code snippet that compiles might have hidden logic holes. Product managers check prototypes to confirm they match user stories accurately.

Teams should define a clear check-in process. The AI proposes solutions, staff reviews them, and the pipeline merges changes only after sign-off. This method keeps the system from “running wild” or shipping half-baked features. Over time, patterns emerge about which tasks AI handles reliably.
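
The sign-off rule described above can be expressed as a small gate. The Python sketch below is one hypothetical way to model it. The `Proposal` class, its fields, and the `can_merge` function are assumptions made for illustration, not the API of any real CI system.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Proposal:
    """An AI-generated change waiting for human review."""
    title: str
    tests_passed: bool = False
    approvals: Set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        # A set means the same reviewer cannot be counted twice.
        self.approvals.add(reviewer)


def can_merge(proposal: Proposal, required_approvals: int = 1) -> bool:
    """Allow a merge only when tests pass and enough humans have signed off."""
    return proposal.tests_passed and len(proposal.approvals) >= required_approvals


change = Proposal(title="Add CRUD endpoint for invoices", tests_passed=True)
print(can_merge(change))  # False: no human sign-off yet
change.approve("alice")
print(can_merge(change))  # True
```

Teams handling sensitive code paths might raise `required_approvals` for those modules while keeping a single reviewer for routine changes.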

Tweak and Train Over Time

Models learn from the feedback of users. If certain suggestions cause repeated problems, staff can label them as incorrect. The system then updates its weights or references. This continuous improvement cycle refines performance, making the AI more aligned with the company’s coding standards or design style.

Periodic refreshes keep the training data relevant. As the SaaS product evolves, older logs or outdated frameworks might mislead the model. Ongoing curation ensures the AI references current best practices. Because SaaS never stands still, the model’s knowledge should also stay fresh.

Generative AI in the Future

Some providers plan a deeper fusion of generative AI across the entire software lifecycle. From concept to deployment, the AI might suggest architecture, optimize container usage, or test advanced edge cases. Real-time collaboration might allow developers to chat with the model about errors or design conflicts. The boundary between human planning and machine output keeps shrinking.

Advanced AI might also unify user analytics with dev feedback. If logs indicate confusion in a specific feature, the model might recommend an alternative design or code fix. This closes the gap between product insight and development action, making SaaS iterations near-instant. However, caution remains essential since an overly automated pipeline can miss unique edge conditions.

Some organizations consider building domain-specific generative models that deeply understand their vertical. A healthcare SaaS might train on HIPAA-compliant data, while a fintech platform references specialized finance logs. That approach refines suggestions even further but demands robust data governance.

Conclusion

Generative AI reshapes how SaaS teams create, test, and launch software. It reduces manual coding by producing common components or design frameworks, allowing experts to focus on specialized logic and architecture. The technology also speeds up user research, data analysis, and support tasks, building a streamlined development environment.

In a competitive SaaS market, the ability to iterate quickly sets winners apart. By leveraging machine-driven code generation or user interface prototypes, product managers can adapt to new demands far faster. The result is a more agile operation that responds to real-time user feedback. Generative AI is a bold leap, and with vigilance and training, it can transform SaaS product development for good.

FAQs

Traditional coding tools handle tasks like syntax checks or auto-completion. Generative AI produces entire code blocks or design suggestions, often derived from large training sets. It guesses your intent rather than just automating small bits.

Generative AI automates repetitive parts and speeds up prototypes, but experienced engineers still design system architecture, validate security, and manage edge cases. AI acts as an assistant, not a complete substitute.

Organizations must ensure that personal or sensitive data is not exposed to external AI providers. Some host models in-house to safeguard logs. Also, thorough privacy checks and anonymized training sets can minimize security risks.